are expected to be similar in meaning.

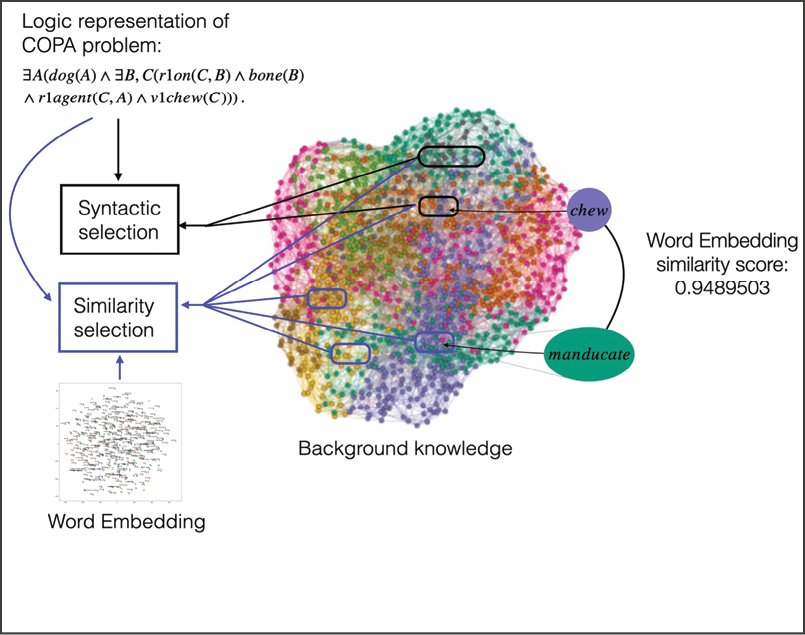

I am expanding upon the commonsense reasoning example displayed in Figure 1 for demonstrating how logical reasoning is used together with word embeddings. Figure 3 shows the logical representation of the first answer alternative, which is automatically constructed from the natural language sentence. This formula must be evaluated together with the formulas from the problem statement by a first-order logic theorem prover. As mentioned earlier, this is only possible provided that background knowledge about the world is added. I have described elsewhere how a logical formulation is developed in the context of a so-called Hyper Tableaus system that is being used as a reasoner.10 The activities of a reasoner are then being combined with background knowledge data that are compiled by knowledge graphs, such as, for example, ConceptNet, which is

a knowledge graph that connects words and phrases of natural language with labeled edges. Its knowledge is collected from many sources that include expert-created resources, crowd-sourcing, and games with a purpose. It is designed to represent the general knowledge involved in understanding language, improving natural language applications by allowing the application to better understand the meanings behind the words people use.11

10 Peter Baumgartner, Ulrich Furbach, and Björn Pelzer, "Hyper Tableaux with Equality, in Automated Deduction—CADE-21: 21st International Conference on Automated Deduction, Bremen, Germany, July 17-20, 2007, ed. Frank Pfenning, Berlin, DE: Springer Verlag 2007, pp. 492-507.

11 Robyn Speer, Joshua Chin, and Catherine Havasi, ConceptNet 5.5: An Open Multilingual Graph of General Knowledge," Proceedings of the AAAI Conference on Artificial Intelligence: Thirty-First AAAI

For starting the reasoning process, there is the need of a selection function that extracts the parts of the background knowledge that might help solve the problem. The figure depicts both a syntactic and a similarity selection function. The syntactic selection works with symbol names that occur in the formulas—it supports reasoning with the language of signs (notiones generales), while the similarity selection is concerned with the meaning of the symbols, or the language of words (notiones communes) by way of analyzing and evaluating word embeddings in targeted semantic spaces. Figure 3 shows that, according to the similarity score from the word embedding, the word "manducate" is selected due to its similarity with the word "chew," which is contained in the formula at hand.12

Conference on Artificial Intelligence 31/1 (2017), 4444-4451, here p. 4444.

12 The figure is adopted, in part, from Ulrike Barthelmeß, Ulrich Furbach, and Claudia Schon, "Consciousness and Automated Reasoning," in Proceedings of the 6th Workshop on Formal and Cognitive Reasoning, eds. Christoph Beierle, Marco Ragni, Frieder Stolzenburg, and Matthias Thimm, CEUR Workshop Proceedings

Figure 3:Automated reasoning on a commonsense problem, using large background knowledgeand a selection function to apply a word embedding.